To say that I distrust Microsoft Windows’ security is putting it lightly. When Microsoft’s disregard for open standards leaves me no choice but to use Windows, I run it in a virtual machine to sandbox its activities.

On Mac OS X, I use a wonderful product called Parallels, which has the added bonus of being able to drag’n’drop files and directories between the guest operating system (Windows) and the host operating system (OS X).

After upgrading to Snow Leopard (10.6), I found that while I could still drag files into Windows from OS X, the reverse no longer worked. Dragging something from the Windows desktop out to the OS X desktop, which worked fine in Leopard (10.5), now simply does nothing.

Now, I’m aware that Apple made some pretty big changes under the hood in Snow Leopard. I’m aware that even the Finder got a fairly intensive overhaul. And I’m even willing to accept that there might be bumps during the transition, as the good folks at Parallels update their product to address little tidbits like this.

However, I’m kind of surprised that this snuck past testing. Even more surprising is that I don’t hear many people talking about it. That led me to think that perhaps I had a local configuration issue.

But then I heard from another Parallels user who had updated to Snow Leopard. He ran into the same problem: Drag’n’Drop now worked in only one direction.

Most of the Snow Leopard fuss currently centers on the fact that Parallels 2.x and 3.x no longer work under Snow Leopard. Parallels made such a good and stable product that early users saw no need to upgrade, since it met their needs. However, Apple’s approach to operating systems is far more progressive than Microsoft’s: Apple is willing to sacrifice backwards compatibility in software and hardware when the technology is substantially old and the benefits of the new far outweigh the trouble. Thus, Apple tends to fix problems rather than band-aid them with workarounds, and in the long haul everyone benefits from faster, smaller, more feature-rich applications instead of bloatware.

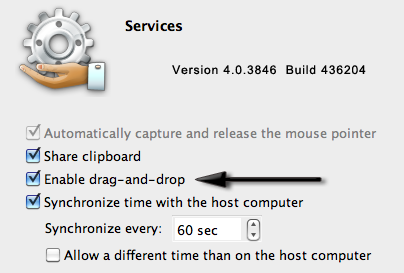

However, I’m riding the Parallels 4.x wave on the bleeding edge. I have Parallels Tools installed. I have Enable Drag’n’Drop checked in the Shared Services configuration. Still, nothing.

I did a little digging around and found one user, Jamie Daniel, who was experiencing the same problem. Since his question went unanswered, I tried asking myself.

I posted an entry in the Parallels forum entitled “Drag files from Guest to Host no longer working”, detailing the problem.

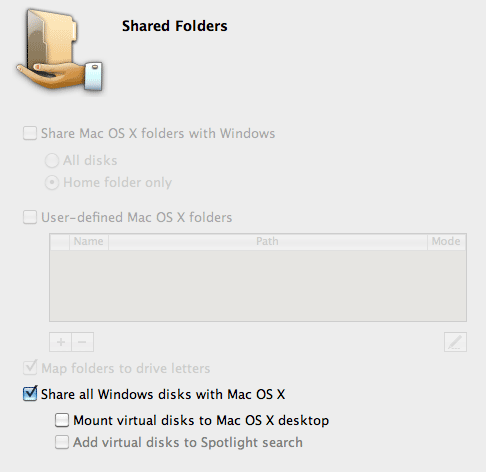

And while I was luckier than Jamie and got an answer, it was fairly clear that someone gave my post a cursory glance and cut’n’pasted a response without reading what I was asking. In short, I did not want Windows to be able to read or write any OS X drives. Should Windows get a virus, I didn’t want it to have free rein to corrupt the OS X filesystem. Only I, via Drag’n’Drop, should be able to move content between the two environments.

While I’m willing to accept that I may have a configuration problem, despite being a power user of Parallels since day one, I’m equally willing to accept that this is simply a Snow Leopard compatibility issue that Parallels will soon address. The problem is that I can’t seem to raise the issue to a level where someone can confirm or deny it.

Worse yet, I no longer seem to be able to log in to Windows via the Finder to mount a Windows disk within OS X, as I used to. Workarounds exist, from a USB disk (which mounts in both environments) to Dropbox to the Windows Guest account’s Parallels mount point, but I’d really like the old capability back.

So I ask Parallels 4.x users running Snow Leopard: are you no longer able to drag from Windows to the OS X desktop?

If you can, how are you doing it?

If you can’t, please head over to the Parallels forum and let them know it’s broken for you as well. This is not a call to attack Parallels; they’re good people. It’s just to raise awareness, so they know the issue is real and can look into it.

UPDATE 14-Sep-2009: I found a workaround, but I’m not happy about it, because it appears to expose the Windows disks to OS X. While I trust OS X, the inverse (sharing OS X disks with Windows) does not appear to be necessary to Drag’n’Drop from OS X to Windows, so I’d expect Enable Drag-and-Drop to be enough in both directions.

If you turn on Share All Disks with OS X, Drag’n’Drop from the Windows desktop to the OS X desktop works.